Virtual screening (VS) is nowadays a standard step before wet-lab experiments in drug discovery [1, 2]. VS estimates the affinities and plausible binding modes of many drug candidates, other drug-like small molecules, or fragments of the former when they bind to a given protein; the results are used to short-list promising candidates. Even though VS is much cheaper than lab experiments, screening large ligand libraries requires investing in a proper High-Performance Computing (HPC) infrastructure. The feasibility of a VS experiment on a given infrastructure can be measured in terms of how long the experiment takes: the longer the calculation time, the less feasible the experiment becomes for practical reasons. In particular, cost is directly proportional to time when VS is performed on pay-per-use infrastructures.

The aim of this paper is to provide a concise review, together with an experimental analysis, of how variations in the VS experiment set-up and in the type of HPC infrastructure affect the execution time. This enables biochemists to make well-informed decisions when setting up their experiments on HPC platforms. In the experiment set-up, we specifically look at the properties of individual ligands as well as Vina configuration parameters. We use libraries of known and available drugs that are common in biomedical studies. These libraries are also suitable for our purpose because they show a wide variety of ligand properties that influence computing time (see “Compound libraries” section).

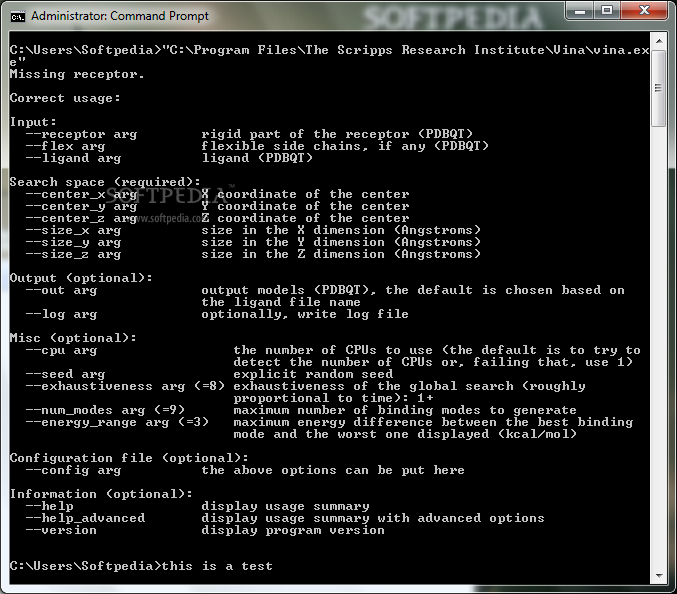

Currently, more than fifty software packages are available for protein-ligand docking, including AutoDock Vina [3], Glide [4], FlexX [5], GOLD [6], and DOCK [7]. Various methods have also been developed to speed up their execution [8, 9, 10, 11, 12]. We take AutoDock Vina as a typical, and arguably the most popular, molecular docking tool available for virtual screening. Its popularity is explained by its being free and by the quality of its results, especially for ligands with 8 or more rotatable bonds [13]. Although this paper is based on AutoDock Vina, the findings reported here possibly also apply to similar software packages and analogous types of experiments. For brevity, we will refer to AutoDock Vina simply as Vina in the rest of the paper.

Paper structure In the rest of this section, we introduce the basic concepts and features of Vina required for setting up a VS experiment. We then explain the characteristics of the four types of computing infrastructures used to run our experiments. The Methods section presents the ligands and proteins used in the experiments, their various set-ups and configurations, as well as the details of how we ran the experiments on each infrastructure. A complete description of our findings is given in the “Results and discussion” section.

AutoDock Vina

AutoDock Vina [3] is a well-known tool for protein-ligand docking developed in the same research lab as the popular tool AutoDock 4 [14, 15]. It implements an efficient optimization algorithm based on a new scoring function for estimating protein-ligand affinity and a new search algorithm for predicting the plausible binding modes. Additionally, it can run calculations in parallel using multiple cores on one machine in order to speed up the computation. In this paper, we adopt the following terminology.

One execution of Vina tries to predict where and how a putative ligand can best bind to a given protein. Within one execution, Vina may repeat the calculations several times with different randomizations (the configuration parameter exhaustiveness controls how many times the calculations are repeated). The part of the protein surface where the tool attempts the binding is specified by the coordinates of a cuboid, which we refer to as the docking box. This is called the “search space” in the Vina manual.

By default, redoing the same execution on the same ligand-protein pair can produce varying binding modes because of the randomized seeding of the calculations. Nevertheless, Vina allows the user to explicitly specify an initial randomization seed, so that the docking results can be reproduced.

Since the repeated calculations in one execution are independent, Vina can perform them in parallel on a multi-core machine. To do so, it creates multiple threads, which run in parallel whenever cores are free. The maximum number of simultaneous threads can be set when starting the docking experiment (using the command-line option --cpu). By default, Vina tries to create as many threads as there are available cores.
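The terminology above (docking box, exhaustiveness, randomization seed, number of threads) maps onto Vina's command-line options. The following is a minimal sketch of how one execution could be assembled from Python. The helper name build_vina_command, the file names, and the box coordinates are hypothetical and serve only as illustration; the option names are those of the Vina 1.1.2 command line, and a binary called vina is assumed to be on the PATH.

```python
from typing import List, Optional, Sequence


def build_vina_command(
    receptor: str,
    ligand: str,
    out: str,
    center: Sequence[float],
    size: Sequence[float],
    exhaustiveness: int = 8,      # Vina's documented default
    cpu: Optional[int] = None,    # None: let Vina detect the number of cores
    seed: Optional[int] = None,   # None: Vina picks a random seed
    vina_binary: str = "vina",    # assumption: binary name on the PATH
) -> List[str]:
    """Return the argument list for one Vina execution (hypothetical helper)."""
    if len(center) != 3 or len(size) != 3:
        raise ValueError("center and size must each have three components")
    cmd = [vina_binary, "--receptor", receptor, "--ligand", ligand, "--out", out]
    # The docking box ("search space") is a cuboid given by center and size.
    for axis, c, s in zip("xyz", center, size):
        cmd += [f"--center_{axis}", str(c), f"--size_{axis}", str(s)]
    cmd += ["--exhaustiveness", str(exhaustiveness)]
    if cpu is not None:
        cmd += ["--cpu", str(cpu)]
    if seed is not None:
        cmd += ["--seed", str(seed)]
    return cmd


# Illustrative values only: file names and coordinates are made up.
cmd = build_vina_command(
    receptor="protein.pdbqt",
    ligand="ligand.pdbqt",
    out="docked.pdbqt",
    center=(10.0, 12.5, -3.0),
    size=(20.0, 20.0, 20.0),
    exhaustiveness=8,
    cpu=4,
    seed=42,
)
print(" ".join(cmd))
# To actually run it: subprocess.run(cmd, check=True)
```

Fixing the seed makes a docking run repeatable, while capping --cpu is useful when several executions share one machine.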

Infrastructures

High-performance computing infrastructures with several levels of computational capacity are typically available to researchers today. In the simplest case, one can take advantage of a personal computer’s multiple cores to speed up, or scale, an experiment. Nowadays, small and medium research groups and enterprises can afford powerful computers with tens of cores. Another alternative is to use accelerators, i.e., hardware that can be used next to the central processor (CPU) to accelerate computations. Examples are graphical processing units (GPU) and Intel’s recent development called Xeon Phi, which can have hundreds of (special-purpose) processing cores. In the extreme case, supercomputers with millions of cores can be used. It is, however, very economical to build a network of “ordinary” computers and use a so-called middleware to distribute the jobs among the available computing cores. This is called distributed computing. We use the term cluster to refer to a network of computers that are geographically in the same place, and the term grid for a network of geographically scattered computers and clusters. A widely used middleware for clusters is the portable batch system (PBS), which is capable of queuing incoming jobs and running them one after the other. A more advanced middleware is Hadoop [16], which has efficient file management and automatically retries failed or stalled jobs, thus greatly improving the overall success rate. Finally, grids may consist of dedicated resources (e.g., using gLite [17] middleware) or volunteered personal computers connected via the Internet (e.g., BOINC [18]).
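Before a middleware can distribute the work, a ligand library has to be divided into jobs. The sketch below shows one simple way to do this, assuming that each docking is independent and that one job processes one batch of ligands. The function name split_into_batches and the ligand file names are hypothetical; this is an illustration, not the partitioning scheme used in the experiments.

```python
from typing import List, Sequence


def split_into_batches(ligands: Sequence[str], n_jobs: int) -> List[List[str]]:
    """Distribute ligands round-robin over at most n_jobs batches.

    Round-robin assignment keeps batch sizes within one ligand of each
    other; empty batches (when n_jobs exceeds the number of ligands)
    are dropped.
    """
    if n_jobs < 1:
        raise ValueError("n_jobs must be at least 1")
    batches = [list(ligands[i::n_jobs]) for i in range(n_jobs)]
    return [batch for batch in batches if batch]


# Illustrative library of ten made-up ligand files split over three jobs.
library = [f"ligand_{i:03d}.pdbqt" for i in range(10)]
batches = split_into_batches(library, n_jobs=3)
for job_id, batch in enumerate(batches):
    print(job_id, len(batch), batch[0])
```

Each batch could then be submitted as one job to the queue of the chosen middleware. If the library is sorted by a property that correlates with docking time, round-robin assignment also tends to spread slow ligands across jobs.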

Cloud The main idea of cloud computing is to use virtualized systems. It means that organizations do not have to invest upfront to buy and maintain expensive hardware. Instead, they can use hardware (or software services running on that hardware) that is maintained by the cloud providers. It is possible to use a single virtual machine or create a cluster on the cloud. Hadoop clusters are usually among the standard services offered by commercial cloud providers. With these pay-as-you-go services, cloud users pay only whenever they use the services and for the duration of use. Although in this paper we used physical grid and cluster infrastructures, the results are generally applicable also to analogous virtual infrastructures.
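Because pay-as-you-go services bill for the duration of use, a rough time and cost estimate can be made before starting a screening. The sketch below assumes that every docking takes the same (mean) time, that dockings run in rounds filling all reserved cores, and that all reserved cores are billed for the whole wall-clock time. The function name and every number in the example, including the price per core-hour, are hypothetical.

```python
import math
from typing import Tuple


def estimate_screening(
    n_ligands: int,
    mean_seconds_per_ligand: float,
    cores_per_docking: int,
    total_cores: int,
    price_per_core_hour: float,
) -> Tuple[float, float, float]:
    """Return (wall-clock hours, billed core-hours, cost) for one screening."""
    concurrent = total_cores // cores_per_docking  # dockings running at once
    if concurrent < 1:
        raise ValueError("not enough cores for a single docking")
    rounds = math.ceil(n_ligands / concurrent)     # sequential rounds needed
    wall_hours = rounds * mean_seconds_per_ligand / 3600.0
    billed_core_hours = wall_hours * total_cores   # all reserved cores billed
    cost = billed_core_hours * price_per_core_hour
    return wall_hours, billed_core_hours, cost


# Made-up example: 1000 ligands, 36 s each on one core, 8 cores reserved.
wall_hours, core_hours, cost = estimate_screening(
    n_ligands=1000,
    mean_seconds_per_ligand=36.0,
    cores_per_docking=1,
    total_cores=8,
    price_per_core_hour=0.05,
)
print(f"{wall_hours:.2f} h wall-clock, {core_hours:.1f} core-hours, cost {cost:.2f}")
```

In practice docking times vary considerably between ligands, so such an estimate is only a first approximation.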

These resources include four different types of infrastructures that are representative of alternatives typically available to scientists worldwide (see Table 1).

Table 1 Characteristics of the infrastructures used in the experiments

| Infrastructure | Total cores | CPU speed (GHz, min–max) | Memory per core (GB) | CPU types |
|---|---|---|---|---|
| Single machine (on HPC cloud) | 8 | 2.13 | 1 | Intel Xeon |
| AMC local cluster | 128 | 2.3 | 4 | AMD Opteron |
| Dutch Academic Hadoop cluster | 1464 | 1.9–2.0 | ≥6 | Intel Xeon - AMD Opteron |
| Dutch Academic grid | >10,000 | 2.2–2.6 | ≥4 | Intel Xeon - AMD Opteron |

Note Because the hardware characteristics of the infrastructures used are very diverse (see Table 1), it would be unfair to compare them directly on the basis of execution times. The use of these infrastructures in our experiments is meant as an illustration of a variety of case studies, and not as a benchmark.